How Deep Learning Works In The Stock Market And How to Utilize It for Investment Decisions

The article was written by Yutian Fang, a Financial Analyst at I Know First and Master of Science in Finance candidate at Brandeis International Business School

Summary

- Making informed investment decisions is always a central concern for investors

- Earlier solutions showed their limitations and improved as techniques developed

- What Deep Learning can do

  - Deep Networks for Unsupervised or Generative Learning

  - Deep Networks for Supervised Learning

  - Hybrid Deep Networks

- How I Know First utilizes Deep Learning for investment decisions

Valuing a company and predicting stock returns are major concerns for investors. Investors try to find as many indicators as possible that offer explanatory power for stock performance, so that they can make favorable decisions.

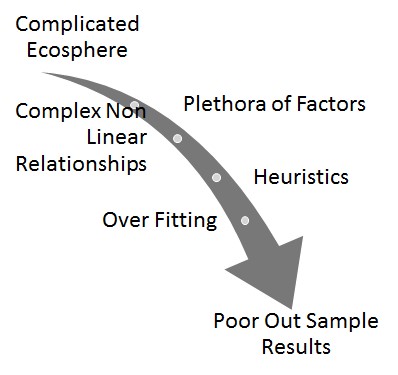

Researchers and analysts have employed various methods to arrive at these estimates, and the techniques never stop advancing. Conventional statistical methods, including many regression models, have reached their limits. Machine learning methods such as neural networks stepped in to tackle these challenges and can be applied to more practical cases, where factors have nonlinear relationships with one another and the statistical distribution of the data is not known before the model is constructed. Nowadays, in the era of big data, the sources of information have been enriched, and more data, such as macroeconomic time series and supporting financial indicators, are deemed informative and valuable for more accurate estimates. On the other hand, exploring and using this plethora of data makes model construction more complex and the representation of information more challenging.

Inspired by its proven results in image recognition, text and speech processing, and information retrieval, Deep Learning was introduced into finance for its advantages in handling the difficulties that shallow architectures face when applied to high-dimensional, non-linear information. Deep Learning methods use a set of algorithms to build a layered, hierarchical architecture that learns and represents large-scale data without requiring prior knowledge. Its recurrent structures can also be more readily tweaked to avoid over-fitting than those of other machine learning methods. Applications of these methods in finance have therefore become widespread, with growing research and use cases in portfolio construction, risk management and high-frequency trading, to name a few fields.

Source: towardsdatascience.com

Deep Learning architectures can be grouped into three major classes based on the purpose they are designed for:

Unsupervised or generative learning deep networks aim to capture high-order correlations in unlabeled input data for pattern recognition or synthesis. When they are used to characterize the joint statistical distribution of the observed data and their associated classes, the networks operate in a generative mode and can be turned into discriminative ones for further learning.

Supervised learning networks are used when target label data are available; the models then directly provide discriminative power for classification.

Hybrid deep networks combine the two types of networks mentioned above, so that unsupervised deep networks provide a good initialization from which discrimination can then be examined. We will introduce some specific networks under each category and describe their applications in finance.

Deep Networks for Unsupervised or Generative Learning

Some deep networks in this category, such as deep belief networks (DBNs), deep Boltzmann machines (DBMs) and restricted Boltzmann machines (RBMs), have generative capabilities: samples can be produced from the network. Others, such as the original forms of deep autoencoders, are simply unsupervised in nature.

A pictorial view of autoencoder’s structure. Source: skymind.ai

The deep autoencoder is one of the most common examples of an unsupervised deep neural network. It encodes the input feature vectors at the hidden layers into a more compact, cost-effective representation, and its output layer has the same dimensions as the input layer and is trained to match it. One practical use of an autoencoder is to map a financial time series to itself, so that the reconstruction errors can serve as a proxy for correlation with the market (for stocks, this is beta). In this way, based on the results from autoencoders, we could replicate an index, or even train and create one that outperforms the market.
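As a minimal sketch of the self-mapping idea above, the NumPy example below trains a tiny autoencoder with a single hidden unit on synthetic returns and reports the reconstruction error per stock. The data generator, the one-factor structure, the layer size, the learning rate and all function names are assumptions made for illustration; they are not taken from this article or from any production model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (assumption): 500 days of returns for 10 stocks that share
# one market factor; later stocks carry more idiosyncratic noise.
n_days, n_stocks = 500, 10
market = rng.normal(0.0, 0.01, size=(n_days, 1))
betas = np.linspace(0.8, 1.2, n_stocks).reshape(1, -1)
noise_scale = np.linspace(0.002, 0.02, n_stocks)
returns = market @ betas + rng.normal(size=(n_days, n_stocks)) * noise_scale

# Standardize each stock so every column has zero mean and unit variance.
X = (returns - returns.mean(axis=0)) / returns.std(axis=0)


def train_autoencoder(X, n_hidden=1, lr=0.01, epochs=3000, seed=0):
    """Train input -> tanh hidden layer -> linear output to reproduce X."""
    init = np.random.default_rng(seed)
    n_features = X.shape[1]
    W_enc = init.normal(0.0, 0.1, size=(n_features, n_hidden))
    b_enc = np.zeros(n_hidden)
    W_dec = init.normal(0.0, 0.1, size=(n_hidden, n_features))
    b_dec = np.zeros(n_features)
    for _ in range(epochs):
        H = np.tanh(X @ W_enc + b_enc)                     # encode
        X_hat = H @ W_dec + b_dec                          # decode
        grad_out = (X_hat - X) / len(X)                    # squared-error gradient
        grad_pre = (grad_out @ W_dec.T) * (1.0 - H ** 2)   # back through tanh
        W_dec -= lr * (H.T @ grad_out)
        b_dec -= lr * grad_out.sum(axis=0)
        W_enc -= lr * (X.T @ grad_pre)
        b_enc -= lr * grad_pre.sum(axis=0)
    return W_enc, b_enc, W_dec, b_dec


def reconstruction_error(X, params):
    """Mean squared reconstruction error for each input column (stock)."""
    W_enc, b_enc, W_dec, b_dec = params
    X_hat = np.tanh(X @ W_enc + b_enc) @ W_dec + b_dec
    return ((X_hat - X) ** 2).mean(axis=0)


params = train_autoencoder(X)
errors = reconstruction_error(X, params)
for i, err in enumerate(errors):
    print(f"stock {i}: reconstruction MSE = {err:.3f}")
```

In this toy setup, stocks that follow the common factor closely tend to be reconstructed with a small error, while noisier stocks are reconstructed poorly. That is the sense in which the error can act as a rough proxy for co-movement with the market.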

Deep Networks for Supervised Learning

Supervised learning methods must generalize from training data to produce inferred models that can be used in unseen situations. Commonly used techniques with discriminative power include deep neural networks (DNNs), recurrent neural networks (RNNs) and convolutional neural networks (CNNs). A prominent example of supervised Deep Learning is the RNN, which is used as a discriminative model when the output is a label sequence associated with the input data. However, this method has difficulty learning long-term dynamics and suffers from the vanishing gradient problem.
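The vanishing gradient problem can be illustrated numerically. The sketch below runs a small tanh RNN on random inputs and tracks the norm of the Jacobian of the current hidden state with respect to the initial one. The network size, the weight scale and the function name `gradient_norms` are hypothetical choices; with small recurrent weights, as assumed here, the norm shrinks quickly, whereas large weights could make it explode instead.

```python
import numpy as np


def gradient_norms(n_steps=50, hidden=16, weight_scale=0.25, seed=0):
    """Spectral norm of d h_t / d h_0 for a vanilla tanh RNN, for each step t."""
    rng = np.random.default_rng(seed)
    # Recurrent weights (assumption: small random values).
    W = rng.normal(0.0, weight_scale / np.sqrt(hidden), size=(hidden, hidden))
    U = rng.normal(0.0, 1.0, size=hidden)  # input weights for a scalar input
    h = np.zeros(hidden)
    J = np.eye(hidden)                     # d h_0 / d h_0
    norms = []
    for _ in range(n_steps):
        x = rng.normal()
        h = np.tanh(W @ h + U * x)
        # Chain rule: d h_t / d h_{t-1} = diag(1 - h_t^2) @ W
        J = (np.diag(1.0 - h ** 2) @ W) @ J
        norms.append(np.linalg.norm(J, 2))
    return norms


norms = gradient_norms()
for t in (1, 10, 25, 50):
    print(f"step {t:2d}: ||d h_t / d h_0|| = {norms[t - 1]:.3e}")
```

Because the error signal for early time steps is multiplied by this Jacobian, a norm close to zero means that distant inputs barely influence the weight updates.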

A pictorial view of LSTM cell. Source: towardsdatascience.com

Long short-term memory networks (LSTMs) are a particular form of RNN that helps preserve a constant error that can be backpropagated through time and layers. Equipped with a forget gate, an input gate and an output gate, the gated cells in an LSTM adjust their weights through iterative training, including backpropagation, and decide when to store, read or write information outside the normal flow of the recurrent network. Applications of LSTM models in finance are numerous, because the memory-state mechanism is well suited to time-series prediction and can outperform traditional algorithms such as the ARIMA model. They are reliable models, as they help to exploit long-memory effects and capture volatility patterns amid large amounts of noisy movement.
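To make the gate mechanism concrete, here is a minimal sketch of one forward step of a standard LSTM cell in NumPy. The weight layout (all four gates stacked in one matrix `W`), the sizes, the random initialization and the sine-wave input are assumptions for illustration only; training by backpropagation is omitted.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4 * hidden, n_in + hidden), b (4 * hidden,)."""
    n = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    f = sigmoid(z[0 * n:1 * n])   # forget gate: how much old memory to keep
    i = sigmoid(z[1 * n:2 * n])   # input gate: how much new content to write
    o = sigmoid(z[2 * n:3 * n])   # output gate: how much memory to expose
    g = np.tanh(z[3 * n:4 * n])   # candidate content
    c = f * c_prev + i * g        # updated cell (memory) state
    h = o * np.tanh(c)            # updated hidden state
    return h, c


rng = np.random.default_rng(0)
n_in, n_hidden = 1, 4
W = rng.normal(0.0, 0.5, size=(4 * n_hidden, n_in + n_hidden))
b = np.zeros(4 * n_hidden)
b[:n_hidden] = 1.0  # bias the forget gate toward remembering

h = np.zeros(n_hidden)
c = np.zeros(n_hidden)
series = np.sin(np.linspace(0.0, 6.0, 30))  # stand-in for a price-derived input
for value in series:
    h, c = lstm_step(np.array([value]), h, c, W, b)
print("final hidden state:", h)
```

The additive update `c = f * c_prev + i * g` is what lets the cell carry information across many steps: when the forget gate is close to 1 and the input gate is close to 0, the memory state passes through almost unchanged.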

Hybrid Deep Networks

Deep networks for unsupervised learning may sometimes fail to sample from the model, while generative models can greatly improve the training of deep discriminative, or supervised-learning, models through regularization or optimization. A hybrid deep architecture, which either comprises or makes use of both classes of models mentioned above, can therefore be used to learn more complex systems. One of the most widely used cases is the pre-trained deep neural network (DNN). For example, by stacking a number of restricted Boltzmann machines (RBMs), we obtain a greedy layer-by-layer learning procedure that improves a variational lower bound on the likelihood of the training data, thus achieving approximate maximum likelihood learning.

A pictorial view of sampling from a RBM during RBM learning. Source: Deng, L. and Yu, D., 2014. Deep learning: methods and applications.

An RBM is composed of one layer of stochastic hidden units and one layer of stochastic visible units, with connections only between the two layers. The hidden variables are used to model the distribution of the input data, and no label information is involved. The pre-trained network can be combined with other learning procedures, especially discriminative ones, and produces better results than a randomly initialized network on a wide variety of tasks. Such pre-training algorithms help to improve the accuracy of predictions, which is promising for advanced applications in finance.
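A minimal sketch of a single binary RBM is shown below. It is trained with one step of contrastive divergence (CD-1), a common approximation that this article does not name explicitly, so treat the training rule as an assumption. The toy data (noisy copies of two binary patterns, which could stand for up/down indicators), the class name `RBM`, the layer sizes and the learning rate are likewise hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class RBM:
    """Binary restricted Boltzmann machine trained with CD-1."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = np.random.default_rng(seed)
        self.W = self.rng.normal(0.0, 0.01, size=(n_visible, n_hidden))
        self.b_v = np.zeros(n_visible)
        self.b_h = np.zeros(n_hidden)

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b_h)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.b_v)

    def cd1_update(self, v0, lr=0.1):
        """One CD-1 update on a batch; returns the reconstruction error."""
        ph0 = self.hidden_probs(v0)                          # positive phase
        h0 = (self.rng.random(ph0.shape) < ph0).astype(float)
        pv1 = self.visible_probs(h0)                         # reconstruction
        ph1 = self.hidden_probs(pv1)                         # negative phase
        n = v0.shape[0]
        self.W += lr * (v0.T @ ph0 - pv1.T @ ph1) / n
        self.b_v += lr * (v0 - pv1).mean(axis=0)
        self.b_h += lr * (ph0 - ph1).mean(axis=0)
        return float(np.mean((v0 - pv1) ** 2))


# Toy data (assumption): noisy copies of two binary patterns.
rng = np.random.default_rng(0)
prototypes = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]], dtype=float)
which = rng.integers(0, 2, size=200)
flips = (rng.random((200, 6)) < 0.05).astype(float)
data = np.abs(prototypes[which] - flips)  # flip about 5% of the bits

rbm = RBM(n_visible=6, n_hidden=2)
errors = [rbm.cd1_update(data) for _ in range(1000)]
print(f"reconstruction error: {errors[0]:.3f} -> {errors[-1]:.3f}")
```

In a greedy layer-by-layer scheme, the hidden probabilities of a trained RBM (`rbm.hidden_probs(data)` here) would serve as the input data for the next RBM in the stack, and the resulting weights would initialize a DNN before supervised fine-tuning.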

I Know First has years of experience solving complex problems with artificial intelligence. Our forecasting algorithms are based on our belief that markets are complex and chaotic, and that the movement “memory” in this non-linear, evolving system can be learned. We use Deep Learning methods along with other machine learning techniques to learn from inputs from different sources and apply different valuation models to produce estimates over different time horizons. Multiple models are created and tested on 15 years of historical data, and the models are renewed as new data arrive. We provide successful strategies that can capture market patterns and assist you in making favorable and efficient investment decisions by providing two variables: signal and predictability. The signal offers a direct suggestion on the stock action. Predictability is the indicator we use to distinguish predictable chaos from randomness and to forecast changes in the market paradigm. We believe our deep understanding of markets, together with investors’ risk management strategies, will make investing a rewarding and pleasant experience.

References

Heaton, J. B., N. G. Polson, and Jan Hendrik Witte. “Deep Learning in finance.” arXiv preprint arXiv:1602.06561 (2016).

Deng, Li, and Dong Yu. “Deep learning: methods and applications.” Foundations and Trends® in Signal Processing 7.3–4 (2014): 197-387.

Siami-Namini, Sima, and Akbar Siami Namin. “Forecasting Economics and Financial Time Series: ARIMA vs. LSTM.” arXiv preprint arXiv:1803.06386 (2018).

Thushan Ganegedara. “Using LSTMs For Stock Market Predictions (Tensorflow).”

Alexandr Honchar. “Neural networks for algorithmic trading. Correct time series forecasting + backtesting.”

Ravindra Kompella. “Using LSTMs to forecast time-series.”

Sonam Srivastava. “Deep Learning in Finance.”