APPLE Machine Learning: iBrain is Already Here

This article was written by Mingyue Liu, a financial analyst at I Know First.


“I had been impressed by the fact that biological systems were based on molecular machines and that we were learning to design and build these sorts of things.” (K. Eric Drexler)

Summary:

- Apple’s achievements in deep learning

- Apple is looking beyond deep learning

- How we are implementing deep learning at I Know First

Deep learning is a family of machine learning methods based on learning data representations. Deep learning algorithms transform their inputs through more layers than shallow learning algorithms do. At each layer, the signal is transformed by a processing unit, like an artificial neuron, whose parameters are iteratively adjusted through training. Deep learning was introduced with the objective of moving machine learning closer to one of its original goals: artificial intelligence (AI).
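To make the idea of layers of adjustable "neurons" concrete, here is a minimal sketch in plain Python: a network with one hidden layer whose weights are iteratively adjusted by gradient descent (backpropagation). It is a toy illustration, not anything from Apple; the layer sizes, learning rate, number of passes, and the XOR task are all arbitrary assumptions chosen so the example runs on its own.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyNet:
    """Input -> hidden layer (sigmoid neurons) -> one sigmoid output."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Layer 1: each hidden neuron applies its own weights and bias.
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        # Layer 2: the output neuron combines the hidden activations.
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def train_step(self, x, target, lr):
        """One gradient-descent update on squared error (backpropagation)."""
        h, y = self.forward(x)
        dy = (y - target) * y * (1 - y)  # error signal at the output
        for j, hj in enumerate(h):
            dh = dy * self.w2[j] * hj * (1 - hj)  # uses w2[j] before it changes
            self.w2[j] -= lr * dy * hj
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * dh * xi
            self.b1[j] -= lr * dh
        self.b2 -= lr * dy


def mean_loss(net, data):
    return sum(0.5 * (net.forward(x)[1] - t) ** 2 for x, t in data) / len(data)


# XOR cannot be solved by a single linear layer, so the hidden layer matters.
DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

net = TinyNet(n_in=2, n_hidden=4, seed=1)
before = mean_loss(net, DATA)
for _ in range(5000):
    for x, t in DATA:
        net.train_step(x, t, lr=0.5)
after = mean_loss(net, DATA)
print(f"mean loss before: {before:.4f}, after: {after:.4f}")
```

Production deep learning stacks many more layers and relies on optimized libraries, but the loop is the same idea: compute outputs layer by layer, measure the error, and nudge every parameter to reduce it.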

As a titan of consumer technology, Apple has been wholeheartedly embracing this surging field, and iBrain was born of this surge. It is already on the market, but you may not have noticed. “The typical customer is going to experience deep learning on a day-to-day level that [exemplifies] what you love about an Apple product,” said Philip Schiller, Apple’s senior vice president of worldwide marketing. “The most exciting [instances] are so subtle that you don’t even think about it until the third time you see it, and then you stop and say, How is this happening?” This is true. When you say “Hey Siri”, or press the microphone button and dictate a text message instead of typing it, you are using deep learning. Even if you do neither, you are still part of it, because iOS 10, introduced in 2016, was a fruit of this technology.

Apple’s Achievements in Deep Learning

Hey, Siri

Apple introduced Siri in 2011, placing itself in the vanguard of applied machine learning.

According to Eddy Cue, Apple’s Senior VP of Internet Software and Services, Siri consists of four key components: speech recognition (to understand when you talk to it), natural language understanding (to grasp what you’re saying), execution (to fulfill a query or request), and response (to talk back to you). To improve Siri’s performance, Apple began training a neural network to replace the original system. Because Apple designs its own chips, it was able to tailor the firmware to best suit the neural network.
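Viewed as software, the four components form a pipeline in which each stage feeds the next. The sketch below is purely hypothetical: every function name is invented, and simple string rules stand in for the neural networks Apple describes, so that the example runs on its own (it needs Python 3.9+ for `str.removeprefix`).

```python
from dataclasses import dataclass


@dataclass
class Intent:
    action: str
    argument: str


def recognize_speech(audio: str) -> str:
    """Speech recognition stand-in: the "audio" here is already a transcript."""
    return audio.strip().lower()


def understand(text: str) -> Intent:
    """Natural language understanding stand-in: keyword rules, not a model."""
    if text.startswith("set a timer for "):
        return Intent("timer", text.removeprefix("set a timer for "))
    if text.startswith("text "):
        return Intent("message", text.removeprefix("text "))
    return Intent("unknown", text)


def execute(intent: Intent) -> str:
    """Execution: fulfill the request (here, just describe what would happen)."""
    if intent.action == "timer":
        return f"Timer set for {intent.argument}."
    if intent.action == "message":
        return f"Message drafted: {intent.argument}"
    return "Sorry, I didn't catch that."


def respond(result: str) -> str:
    """Response: a real assistant would synthesize speech from this text."""
    return result


def assistant(audio: str) -> str:
    return respond(execute(understand(recognize_speech(audio))))


print(assistant("Set a timer for 10 minutes"))  # → Timer set for 10 minutes.
```

Keeping the stages separate is what makes the replacement described above possible: one stage can be swapped for a trained neural network without rewriting the others.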

As an iPhone user, you may have noticed that Siri has become better at understanding your needs. Instead of having to speak in a stiff, formal manner, you can now talk to Siri more freely. Deep learning is behind this improvement.

Apple TV

Another important application of Siri is on Apple TV, which stands out from its competitors for its sophisticated voice control. Here Siri appears able to handle more complex tasks, such as offering recommendations, and deep learning is behind this as well.

S+U Learning

Apple’s AI team reached a milestone when it published its first research paper on November 15, 2016, presenting its latest work on Simulated and Unsupervised (S+U) learning. S+U learning is designed to make synthetic images more realistic; if it succeeds, it could eliminate much of the effort spent on data collection and human annotation.

In this paper, the team introduced SimGAN, which, according to the authors, “refines synthetic images from a simulator using a neural network which we call the ‘refiner network’…a synthetic image is generated with a black box simulator and is refined using the refiner network”. An overview of the system is shown below. The refiner network, R, and the discriminator network, D, are updated in turn through adversarial training, while a self-regularization term keeps each refined image close to its synthetic original; together they make the synthetic images more realistic.

Source: Learning from Simulated and Unsupervised Images through Adversarial Training, Apple Inc.

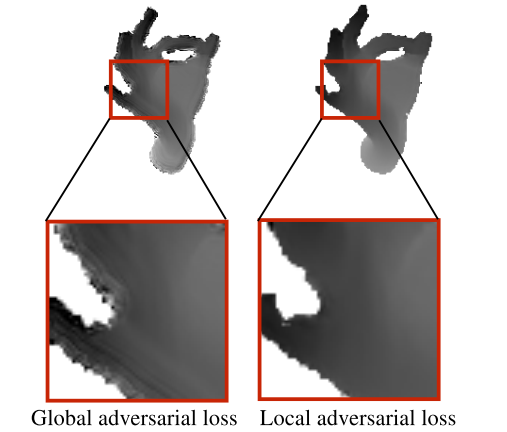

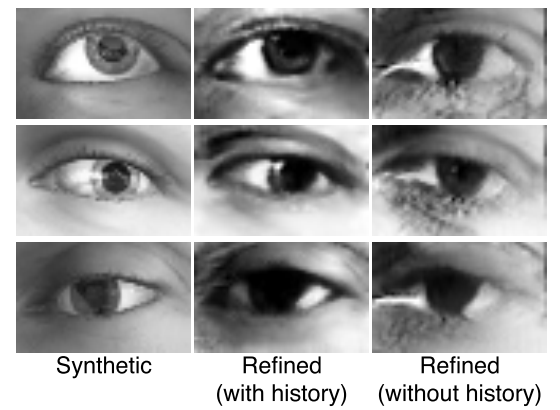

The team did not specify potential applications of this technology in the paper. However, it is not difficult to picture it being combined with the driverless-car technology the company has been working on for so long. The core challenge for a self-driving car is to identify different objects and respond accordingly. The traditional approaches to this challenge are either manually labeling real-world data and adding it to an existing database, or training models on synthetic images. The former is not only extremely expensive and time-consuming but also error-prone, and the size of a hand-labeled database is inherently limited. The latter is criticized because of the gap between real and synthetic images.

Apple trains its models on synthetic images while working to close that gap. The AI team improved on traditional image-synthesis approaches in two ways: 1) restricting the discriminator’s receptive field to local regions instead of the whole image, which significantly reduces artifacts, and 2) updating the discriminator with a “memory” of previously refined images rather than only the current ones. Both techniques worked to the team’s satisfaction, as shown in the figures from the paper.
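The two refinements can be sketched in plain Python. The buffer roughly follows the scheme described in the SimGAN paper, where half of each discriminator batch comes from the refiner's latest output and half from a history of earlier refined images; the class and function names, the use of strings as stand-ins for images, and the random-replacement details are assumptions for illustration. The patch loss assumes a discriminator that outputs one probability per local patch instead of one per image.

```python
import math
import random


class ImageHistoryBuffer:
    """Keeps earlier refiner outputs so the discriminator keeps seeing them."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.images = []
        self.rng = random.Random(seed)

    def mix_batch(self, refined):
        """Build a discriminator batch from current and historical images.

        Half of the batch comes from the refiner's latest output and half is
        sampled from the buffer (once it holds enough images). Afterwards,
        half of the new images are written into the buffer.
        """
        half = len(refined) // 2
        if len(self.images) >= half:
            batch = list(refined[: len(refined) - half]) + self.rng.sample(self.images, half)
        else:
            batch = list(refined)  # too little history yet: use current images only
        for img in refined[:half]:
            if len(self.images) < self.capacity:
                self.images.append(img)
            else:
                # Buffer full: overwrite a randomly chosen old entry.
                self.images[self.rng.randrange(self.capacity)] = img
        return batch


def local_realism_loss(patch_probs):
    """Refiner's adversarial term summed over local patches.

    patch_probs[k] is the discriminator's probability that patch k of a
    refined image is *refined* rather than real; the refiner wants these
    near 0, so each patch contributes -log(1 - p).
    """
    eps = 1e-12  # guards against log(0) when p == 1
    return sum(-math.log(1.0 - p + eps) for p in patch_probs)


buf = ImageHistoryBuffer(capacity=4, seed=0)
first = buf.mix_batch(["a", "b", "c", "d"])   # no history yet
second = buf.mix_batch(["e", "f", "g", "h"])  # 2 new + 2 from history
print(first, second, buf.images)
print(local_realism_loss([0.5, 0.5, 0.5, 0.5]))
```

Scoring patches separately means an artifact in one corner is penalized where it occurs instead of being averaged away, and replaying old refined images stops the discriminator from forgetting artifacts the refiner produced earlier.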

“These terms simultaneously minimize the differences between synthetic and real images while minimizing the difference between synthetic and refined images to retain annotations,” wrote John Mannes in an article for TechCrunch. “The idea here is that too much alteration can destroy the value of the unsupervised training set. If trees no-longer look like trees and the point of your model is to help self-driving cars recognize trees to avoid, you’ve failed.”
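The balance Mannes describes corresponds to the refiner objective in the SimGAN paper: a realism term that tries to fool the discriminator, plus a self-regularization term that keeps each refined image close to its synthetic source. In the notation here (adapted for this article), $x_i$ is a synthetic image, $y_j$ a real image, $R_\theta$ the refiner, $D_\phi$ the discriminator's probability that an image is refined rather than real, and $\lambda$ a weighting constant. The paper allows the regularizer to compare images in a feature space; the identity mapping is assumed here for simplicity.

$$
\mathcal{L}_R(\theta) = \sum_i \Big[ -\log\big(1 - D_\phi(R_\theta(x_i))\big) + \lambda \,\big\lVert R_\theta(x_i) - x_i \big\rVert_1 \Big]
$$

$$
\mathcal{L}_D(\phi) = -\sum_i \log D_\phi\big(R_\theta(x_i)\big) - \sum_j \log\big(1 - D_\phi(y_j)\big)
$$

Raising $\lambda$ keeps refined images closer to the simulator output, and therefore to its labels; lowering it lets the refiner chase realism at the risk of the failure Mannes describes, where trees no longer look like trees.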

Source: Learning from Simulated and Unsupervised Images through Adversarial Training, Apple Inc.

APPLE: We Are Looking Beyond Deep Learning

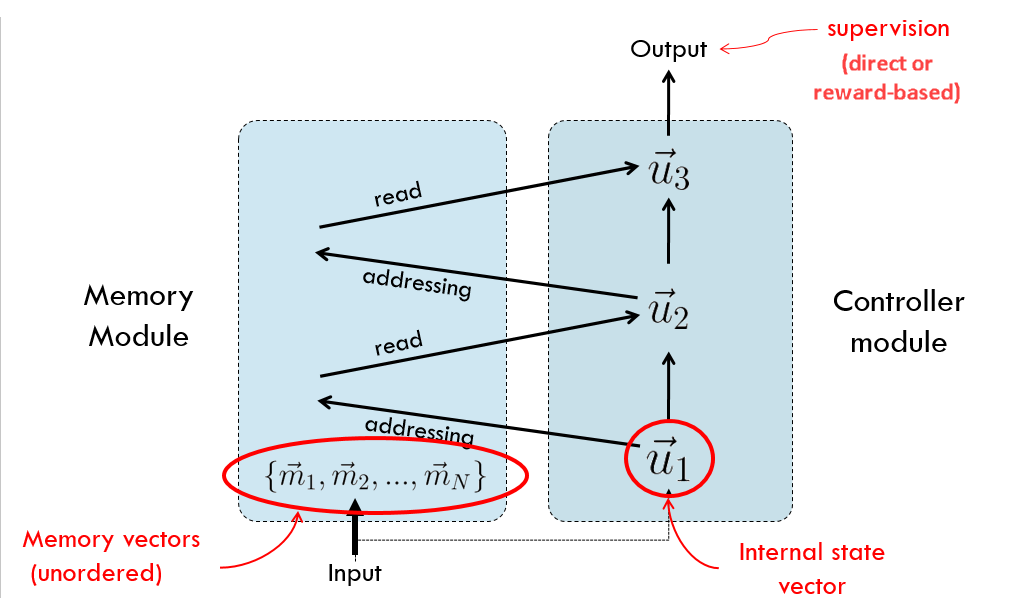

At the MIT Technology Review’s EmTech Digital conference on March 29, 2017, Ruslan Salakhutdinov, Apple’s director of AI research, said that Apple is looking for solutions that go far beyond deep learning. He implied that Apple’s next move would involve reinforcement learning, together with techniques that give machines a form of “long-term memory”, extending what standard deep learning can do. Such approaches offer another route to hard-to-program problems such as voice and image recognition, robotics, and automated driving.

To understand the significance of this progress, look at how a machine answers a question, as illustrated in the figure. The input (a voice message, for example) is encoded as a sequence of words in the order in which the machine receives them. These are then transformed into distributed vector representations and sent to the Episodic Memory Module, where the internal memory is stored; there, an attention mechanism picks out the relevant facts and the memory is updated. Finally, the machine gives you its answer. This may look ordinary, but if you imagine the machine as a person with an eidetic memory, you can see the enormous potential of such machines.

Source: Memory Networks for Language Understanding, Jason Weston
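The attention-and-update step can be sketched in a few lines of plain Python. This is a toy in the spirit of the memory network in the figure, not Apple's or the cited authors' implementation: the vocabulary, the bag-of-words vectors (in place of learned distributed representations), the dot-product scoring, and the fixed 50/50 memory update (real models learn this step, for example with a recurrent unit) are all assumptions.

```python
import math

VOCAB = ["john", "mary", "kitchen", "garden", "where"]


def embed(sentence):
    """Toy bag-of-words vector over VOCAB (stand-in for learned embeddings)."""
    words = sentence.lower().split()
    return [float(words.count(w)) for w in VOCAB]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def memory_hop(facts, question, memory):
    """One attention pass over stored facts, then a memory update."""
    # Attention: score each fact against the question and the current memory.
    weights = softmax([dot(f, question) + dot(f, memory) for f in facts])
    # Context: attention-weighted sum of the fact vectors.
    context = [sum(w * f[k] for w, f in zip(weights, facts)) for k in range(len(question))]
    # Update: blend old memory with the new context (a real model learns this).
    new_memory = [0.5 * (m + c) for m, c in zip(memory, context)]
    return weights, new_memory


facts = [embed("John went to the kitchen"), embed("Mary went to the garden")]
question = embed("Where is Mary")
weights, memory = memory_hop(facts, question, memory=question)
print([round(w, 3) for w in weights])  # → [0.119, 0.881]
```

Most of the attention lands on the fact that mentions Mary, and the updated memory now carries "garden", which is the information an answer module would need. Running several hops lets a model chain facts together.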

Ruslan was realistic enough to acknowledge that it remains a significant challenge to “incorporate all that prior knowledge into deep learning”, but let’s live in hope.

How We Are Implementing Deep Learning at I Know First

The stock market we face is complex: its performance contains both a systematic and a random component. Market participants therefore do whatever they can to forecast future market movements. I Know First is clearly a competitive player, second to none.

I Know First provides AI-based algorithmic forecasting solutions for capital markets, aimed at spotting the best investment opportunities. The algorithm generates daily market predictions for stocks, commodities, ETFs, interest rates, currencies, and world indices over short-, medium-, and long-term time horizons.

The algorithm we currently use is based on artificial intelligence and machine learning. Its key principle lies in the fact that a stock’s price is a function of many factors interacting non-linearly, which is why it is advantageous to use elements of artificial neural networks and genetic algorithms.

How does it work? First, the inputs are analyzed: a model ranks factors according to their significance in predicting the target stock price. Then multiple models are created and tested using 15 years of historical data. Only the best-performing models are kept, and the rest are rejected. The models are refined every day as new data becomes available. The algorithm is purely empirical and self-learning, without any human bias. The models, and thus the market forecast system, adapt to the new reality every day while following general historical rules.
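The steps above (rank the inputs, build many candidate models, test them on history, keep only the best) can be illustrated with a deliberately simple sketch. It is not I Know First's algorithm, which the article describes as using neural networks and genetic algorithms: here each candidate is a hypothetical one-factor linear regression, the factor names and data are synthetic, and validation is a single train/test split.

```python
import random


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 if either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5 if vx and vy else 0.0


def fit_line(xs, ys):
    """Least-squares slope a and intercept b for y ≈ a*x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    vx = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / vx if vx else 0.0
    return a, my - a * mx


def mse(preds, ys):
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


def select_models(factors, target, split, keep=2):
    """Rank factors, fit one candidate per factor, keep the best performers.

    Ranking and fitting use only data before `split`; scoring uses data
    after it, so candidates are judged on history they were not fit to.
    """
    train_y, test_y = target[:split], target[split:]
    ranked = sorted(factors, key=lambda name: abs(pearson(factors[name][:split], train_y)),
                    reverse=True)
    scored = []
    for name in ranked:
        a, b = fit_line(factors[name][:split], train_y)
        preds = [a * x + b for x in factors[name][split:]]
        scored.append((mse(preds, test_y), name, a, b))
    scored.sort()  # lowest validation error first
    return ranked, scored[:keep]  # survivors; the rest are rejected


# Synthetic data: "momentum" drives the target strongly, "volume" weakly,
# "noise" not at all. Names and numbers are made up for illustration.
rng = random.Random(42)
n = 200
factors = {name: [rng.gauss(0, 1) for _ in range(n)] for name in ("momentum", "volume", "noise")}
target = [2 * m + 0.5 * v + rng.gauss(0, 0.3)
          for m, v in zip(factors["momentum"], factors["volume"])]

ranked, best = select_models(factors, target, split=150)
print("ranking:", ranked)
print("kept:", [name for _, name, _, _ in best])
```

To mimic the daily refinement, one could append each day's new observations to `factors` and `target` and call `select_models` again. A real system would also need safeguards against overfitting to a single validation window.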

We continually train our models to better capture market movements. Despite all the randomness and irrationality in the market, self-learning algorithms built on machine learning, and kept up to date with developments in deep learning, give traders an advantage.
